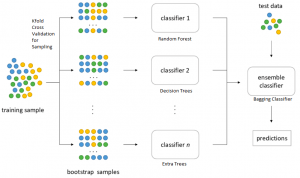

Bagging Predictors. Machine Learning

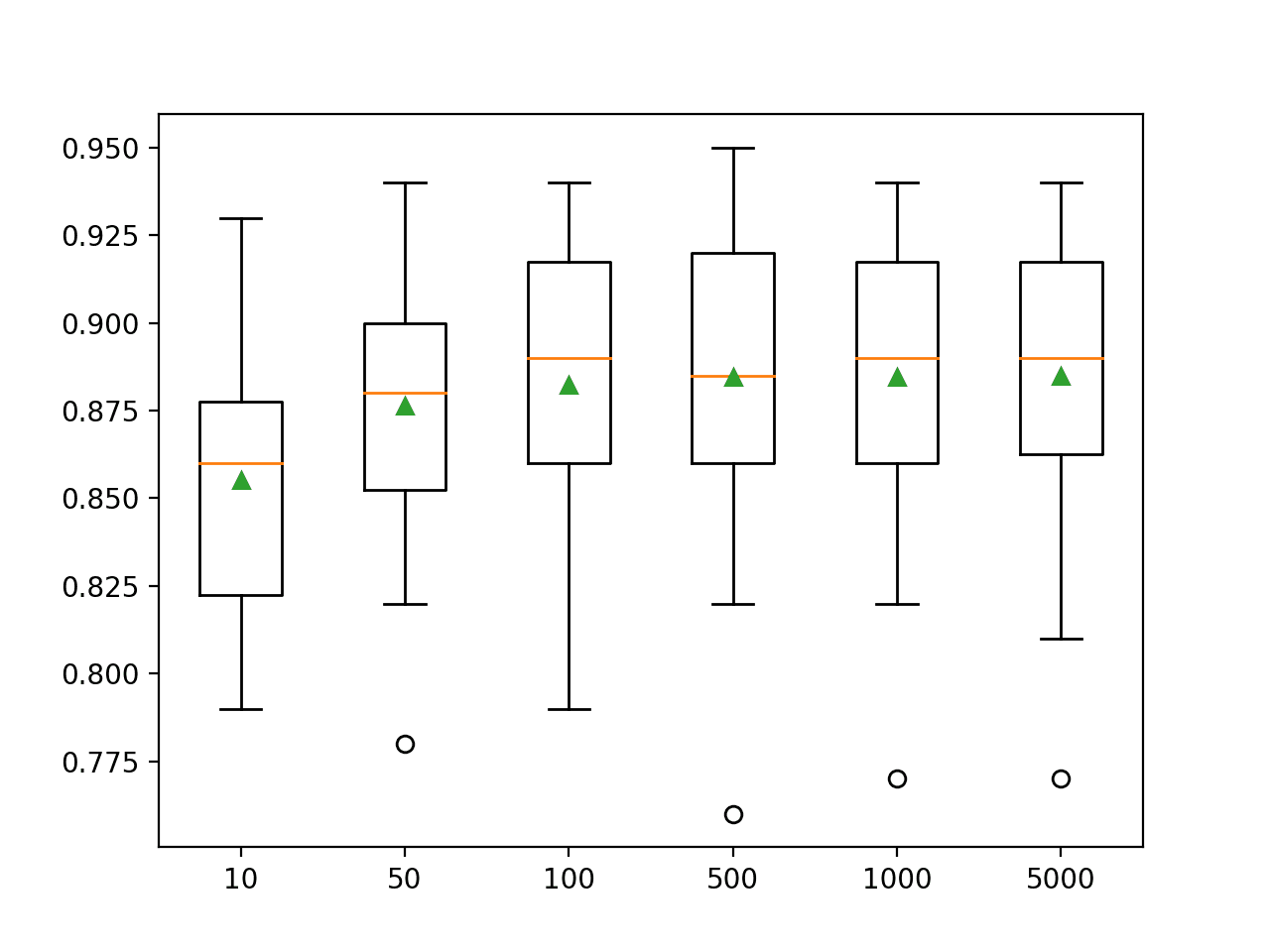

Tests with regression trees and subset selection in linear regression show that bagging can give substantial gains in accuracy. Given a new data point, the bagged prediction is the average of the predictions from each model.
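The averaging step described above can be sketched in a few lines. This is a minimal illustration, not the procedure from the original paper: the base learner is a one-split regression stump on a single feature, and the names `fit_stump`, `bagged_fit`, and `bagged_predict`, as well as the choice of 25 models, are assumptions made for the example.

```python
import random
from statistics import mean

def fit_stump(xs, ys):
    """Fit a one-split regression stump on 1-D inputs by minimising squared error."""
    best = None
    for t in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        if not left or not right:
            continue  # a split must leave points on both sides
        lm, rm = mean(left), mean(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    if best is None:
        # All inputs identical: fall back to predicting the overall mean.
        m = mean(ys)
        return lambda x: m
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm

def bagged_fit(xs, ys, n_models=25, seed=0):
    """Train n_models stumps, each on its own bootstrap sample."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        # Bootstrap: draw n row indices with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models

def bagged_predict(models, x):
    """Average the predictions of all models for a single input."""
    return mean(m(x) for m in models)

# Hypothetical toy data: a step function.
xs = list(range(10))
ys = [0] * 5 + [10] * 5
models = bagged_fit(xs, ys)
print(bagged_predict(models, 0), bagged_predict(models, 9))
```

Each stump sees a slightly different sample, so the split points differ from model to model; averaging smooths those differences out.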

Ensemble Models Bagging And Boosting Dataversity

Machine Learning 24, 123-140 (1996). © 1996 Kluwer Academic Publishers, Boston.

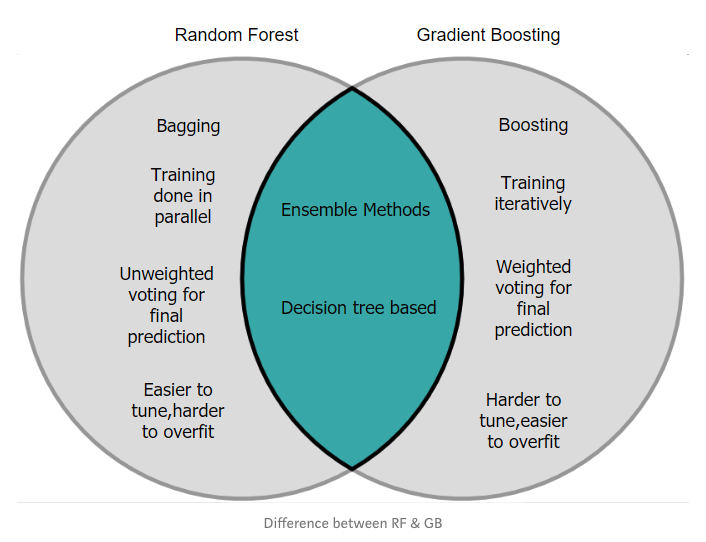

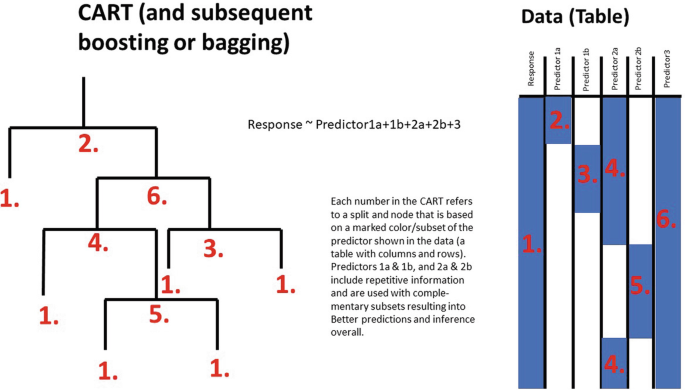

The change in the model's prediction. Bagging, also known as bootstrap aggregation, is an ensemble learning method commonly used to reduce variance within a noisy dataset. Methods such as decision trees can be prone to overfitting the training set, which can lead to wrong predictions on new data.

The vital element is the instability of the prediction method. Bootstrap aggregation (bagging) is an ensembling method in which a random sample of the training data is drawn with replacement for each model.

Learning algorithms that improve their bias dynamically through. Important customer groups can also be determined based on customer behavior and temporal data.

Bagging aims to reduce variance and overfitting in machine learning models. In other words, both bagging and pasting allow training instances to be sampled several times across multiple predictors. Improving the scalability of rule-based evolutionary learning.

Bagging predictors is a method for generating multiple versions of a predictor. Customer churn prediction was carried out using AdaBoost classification and a BP neural network. When sampling is performed without replacement, it is called pasting.
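The difference between the two sampling schemes is small in code. The following is a minimal sketch using only the standard library; the function name `draw_indices` and the sample sizes are hypothetical choices for illustration.

```python
import random

def draw_indices(n_rows, sample_size, replace, rng):
    """Return row indices for one ensemble member's training sample."""
    if replace:
        # Bagging: each draw is independent, so an index can repeat.
        return [rng.randrange(n_rows) for _ in range(sample_size)]
    # Pasting: draw distinct indices, so no row repeats within this sample.
    return rng.sample(range(n_rows), sample_size)

rng = random.Random(0)
bagging_sample = draw_indices(100, 100, replace=True, rng=rng)
pasting_sample = draw_indices(100, 60, replace=False, rng=rng)
print(len(set(bagging_sample)), "distinct rows in the bagging sample")
print(len(set(pasting_sample)), "distinct rows in the pasting sample")
```

With pasting, the sample size has to be smaller than the dataset for the ensemble members to differ at all; with bagging, a full-size sample already leaves out some rows and repeats others.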

For example, if we had 5 bagged decision trees that made the following class predictions for a in. Improving nonparametric regression methods by.
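The five-tree example above does not list its predictions, so the votes below are hypothetical. Assuming the usual rule for bagged classifiers, where the ensemble returns the most frequent class, the aggregation can be sketched as:

```python
from collections import Counter

def majority_vote(predictions):
    """Return the most frequent class label among the ensemble's predictions."""
    # most_common(1) yields [(label, count)]; on a tie, the label that
    # appeared first in the input wins.
    return Counter(predictions).most_common(1)[0][0]

# Hypothetical class predictions from 5 bagged decision trees for one input.
tree_votes = ["blue", "blue", "red", "blue", "red"]
print(majority_vote(tree_votes))
```

Using an odd number of trees avoids ties in two-class problems.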

Let me briefly define variance and overfitting. Statistics Department University of.

Published 1 August 1996. Bagging predictors is a method for generating multiple versions of a predictor and using these to get an aggregated predictor.

Bootstrap aggregating, also called bagging (from bootstrap aggregating), is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms. Evolutionary learning techniques are comparable in accuracy with other learning. Manufactured in The Netherlands.

The first part of this paper provides our own perspective view in which the goal is to build self-adaptive learners ie.

Bagging Machine Learning Through Visuals 1 What Is Bagging Ensemble Learning By Amey Naik Machine Learning Through Visuals Medium

An Efficient Mixture Of Deep And Machine Learning Models For Covid 19 Diagnosis In Chest X Ray Images Plos One

Supervised Machine Learning Classification A Guide Built In

Super Learner Machine Learning Algorithms For Compressive Strength Prediction Of High Performance Concrete Lee Structural Concrete Wiley Online Library

Guide To Ensemble Methods Bagging Vs Boosting

How To Develop A Bagging Ensemble With Python

Boosting Bagging And Ensembles In The Real World An Overview Some Explanations And A Practical Synthesis For Holistic Global Wildlife Conservation Applications Based On Machine Learning With Decision Trees Springerlink

Applications Of Artificial Intelligence And Machine Learning Algorithms To Crystallization Chemical Reviews

Bagging And Random Forest Ensemble Algorithms For Machine Learning

Vob Predictors Voting On Bagging Classifications Semantic Scholar

Page 136 Contributed Paper Session Cps Volume 4

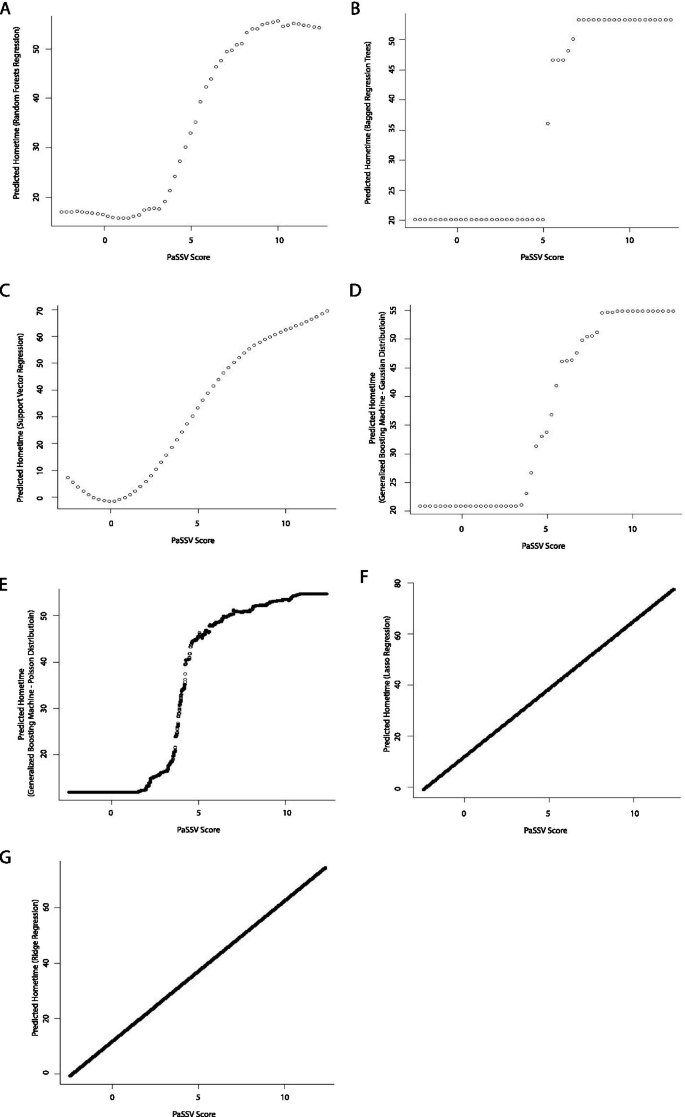

Comparing Regression Modeling Strategies For Predicting Hometime Bmc Medical Research Methodology Full Text

Bagging And Random Forests From Scratch

Learning Ensemble Bagging And Boosting Video Analysis Digital Vidya

What Is Bagging Vs Boosting In Machine Learning

An Introduction To Bagging In Machine Learning Statology

Bagging Classifier Python Code Example Data Analytics

An Empirical Study Of Bagging Predictors For Different Learning Algorithms Semantic Scholar